What is Airflow?

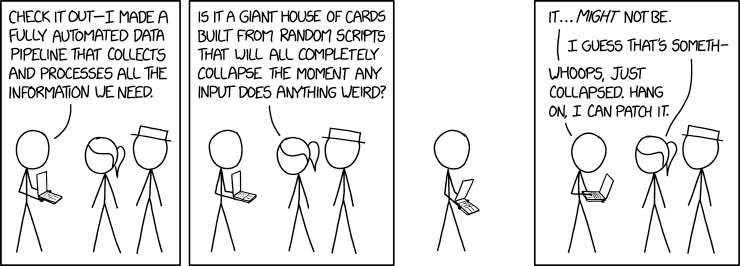

One of the cornerstones of data engineering is job orchestration. Once you have built your fancy transformation code, which takes data from millions of sources, cleans it, and feeds it into your beautiful dimensional model, you need a way to automate it.

Long gone are the days of bash scripts triggered by a cron schedule, all running on a laptop plugged in 24/7 under your desk at work!

Apache Airflow is a common orchestration framework used by data engineers to schedule pipelines. Since workflows are written in Python, it is very convenient and accessible to developers. Some of the other things it brings to the table:

- Scalable: You are in total control of the workflow, so it can be written in a way that scales to really complex scenarios

- Extensible: It is extensible by nature, allowing you to build upon the provided Operators and write your own

- Dynamic Pipeline Generation: Pipelines/DAGs are created on the fly upon building the container, which simplifies use cases like Templated Workflows

- Monitoring and Logging: It comes with a dandy logging setup built in

- Simple Scheduling: You can use CRON expressions

- Open Source: Transparency and great support by the community
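To make the Simple Scheduling point concrete: a standard cron expression has five space-separated fields. The sketch below only labels those fields so the schedule is easier to read. The helper name `describe_cron` is hypothetical and not part of Airflow, which does its own parsing.

```python
# Names of the five fields in a standard cron expression, in order.
CRON_FIELDS = ("minute", "hour", "day_of_month", "month", "day_of_week")


def describe_cron(expression: str) -> dict:
    """Label each field of a five-field cron expression (no validation of values)."""
    parts = expression.split()
    if len(parts) != len(CRON_FIELDS):
        raise ValueError(f"expected {len(CRON_FIELDS)} fields, got {len(parts)}")
    return dict(zip(CRON_FIELDS, parts))


# "0 6 * * *" means: minute 0, hour 6, every day, i.e. daily at 06:00.
daily_at_six = describe_cron("0 6 * * *")
print(daily_at_six)
```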

It is great, and the perfect tool to add to your belt if you are getting started.

Your First Pipeline

Setting up Airflow

We’ll keep it simple in this example - however, the requirements will vary depending on what you are trying to achieve with your pipeline.

You will need Python. I would also recommend setting up a virtual environment as a best practice (with Python's venv, for example). Finally, pip install whatever version of Airflow you will be using (the package is published on PyPI as apache-airflow).

The Airflow db (the metadata database, typically initialised with `airflow db init` in Airflow 2.x) functions as your central repository of DAG states. All of the states and history of your DAGs are referenced from here, and it serves data to the webserver (UI) and the scheduler. Along with the scheduler, it is the core of Airflow operations.

Creating a Simple Data Pipeline

All Airflow operations are based on Directed Acyclic Graphs, or DAGs. You can think of DAGs as the building blocks of your pipeline: they contain the instructions for what actions your pipeline will perform and in what order. Here is the official explanation from the docs.
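To illustrate the idea independently of Airflow, here is a small sketch using Python's standard-library `graphlib` (Python 3.9+). The task names are hypothetical. It shows the two properties that matter: dependencies determine a valid run order, and a cycle makes ordering impossible, which is why the graph has to be acyclic.

```python
from graphlib import CycleError, TopologicalSorter

# Hypothetical pipeline: each task maps to the set of tasks it depends on.
dependencies = {
    "extract": set(),
    "clean_orders": {"extract"},
    "clean_customers": {"extract"},
    "load_model": {"clean_orders", "clean_customers"},
}

# A valid execution order: every task appears after all of its dependencies.
run_order = list(TopologicalSorter(dependencies).static_order())
print(run_order)

# Two tasks that depend on each other form a cycle, so no order exists.
cyclic = {"a": {"b"}, "b": {"a"}}
try:
    list(TopologicalSorter(cyclic).static_order())
    has_cycle = False
except CycleError:
    has_cycle = True
print("cycle detected:", has_cycle)
```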

There are three different ways you can define a DAG (a context manager, the standard constructor, or the @dag decorator). Here is an example of what DAG code looks like, using the standard constructor:
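A minimal sketch of such a DAG file, assuming Airflow 2.x import paths. The `dag_id`, task ids, start date and schedule are illustrative placeholders rather than values from the original example. Newer releases rename DummyOperator to EmptyOperator, so adjust the import to match your version.

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.dummy import DummyOperator

# Standard constructor: create the DAG object, then pass it to each task.
dag = DAG(
    dag_id="my_first_dag",            # hypothetical name shown in the UI
    start_date=datetime(2024, 1, 1),  # illustrative start date
    schedule_interval="@daily",       # a cron expression also works here
    catchup=False,                    # do not backfill runs since start_date
)

# Placeholder tasks that do nothing; they only show the syntax.
start = DummyOperator(task_id="start", dag=dag)
end = DummyOperator(task_id="end", dag=dag)

# ">>" sets the dependency: "start" runs before "end".
start >> end
```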

Operators are the actions your DAGs will perform. In this case the DummyOperator does nothing; I am using it simply to showcase the syntax of a DAG. Operators are what make Airflow so useful. There are tons of them and the community support is great.

Personally, a lot of the pipelines I write use Cloud services from GCP - Airflow provides some great GCP operators, see them here. There are also operators for AWS, Azure and lots more.

Extending our Example

Let’s create a BashOperator task for example - it would look something like this if we add it to our DAG above:
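As a sketch under the same assumptions (Airflow 2.x import paths, hypothetical DAG and task names), a BashOperator task placed between two placeholder tasks could look like this:

```python
from datetime import datetime

from airflow import DAG
from airflow.operators.bash import BashOperator
from airflow.operators.dummy import DummyOperator

dag = DAG(
    dag_id="my_first_dag",            # hypothetical name
    start_date=datetime(2024, 1, 1),  # illustrative start date
    schedule_interval="@daily",
    catchup=False,
)

start = DummyOperator(task_id="start", dag=dag)

# BashOperator runs a shell command on the worker executing the task.
say_hello = BashOperator(
    task_id="say_hello",
    bash_command="echo 'Hello from Airflow'",
    dag=dag,
)

end = DummyOperator(task_id="end", dag=dag)

# Run order: start, then the bash task, then end.
start >> say_hello >> end
```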

Running your DAG

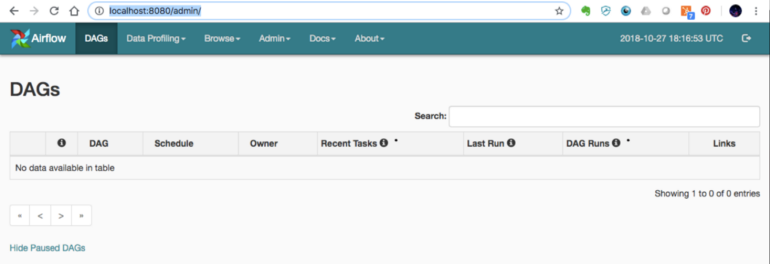

The main way you will interact with your DAGs is through the Airflow UI. To get it locally, you start the webserver. In Airflow 2.x, a command along the lines of `airflow webserver --port 8080` spins up the webserver on localhost port 8080.

For any scheduling to work, you then need to run the Airflow scheduler, typically in a separate terminal with `airflow scheduler`.

Once you run these two, you should be able to access the web UI - it should look something like this

Things to Consider…

This was a basic overview of what Airflow is and what it can do. There are several things to keep in mind, however, when we are talking about production data engineering systems.

Docker

One standard practice I did not cover here is the use of Docker containers. This makes local development less of a hassle, since you are running everything within its own isolated environment on your local machine. It also makes deployments to the cloud far less painful, because all your dependencies are managed in your Dockerfile.

Docker Compose can also be used to simplify the local dev experience - instead of spinning up several containers and executing separate commands to run the webserver, scheduler, db, etc., you can manage all of it with a single command. Some SaaS companies are basically built on this idea, taking open source software and making it easy for enterprises to integrate. Take a look at Astronomer for example.

Cloud

This leads me to the second topic, which is hosting. Airflow is in essence an application that needs resources to run 24/7. Running it locally means that it is still hosted on your laptop, which is not enough for enterprise and production-level solutions.

It is common practice to have dedicated hardware (either company-owned or on a cloud platform) that runs your orchestrator 24/7. This is what enables full automation of your data pipelines.

In some companies, part of Data Engineering is doing the platform work required to keep these solutions stable, where you will dabble in areas of software engineering and DevOps.

Concluding

Apache Airflow can be considered the industry standard for pipeline orchestration. It is an extremely useful and flexible tool for data engineers to create complex data automations. It is also a great introduction to software best practices and even cluster management - because of how it works under the hood.

Let me know if you would like a more in-depth example!